The Crowded Coffee Shop: Using Chatbots Safely with Company Data

Imagine you're discussing a top-secret company project with a colleague. Would you do it by shouting across a crowded coffee shop? Of course not. You have no idea who might be listening, what they might misunderstand, or how they might use that information later.

Using a public AI chatbot for sensitive work is very similar. When you enter information, you're having a conversation with a massive, complex system. The critical question is: what happens to your conversation after it's over? By default, some AI companies may use the conversations you have on their public tools to further train their models. While this helps them improve their AI, it poses a huge risk if your conversation contains confidential information.

Taking Control: How to Protect Your Conversations

Thankfully, the major AI companies recognize this concern and let you opt out. The single most important safety feature they offer is the ability to turn off training on your data. This keeps your conversations from being fed back into the model for future learning. It does not make them invisible to the provider, though. Depending on the platform, conversations may still be stored for a limited period, for example to monitor for abuse.

Your Safety Checklist: 3 Simple Steps

Before you use any chatbot for work, follow these steps.

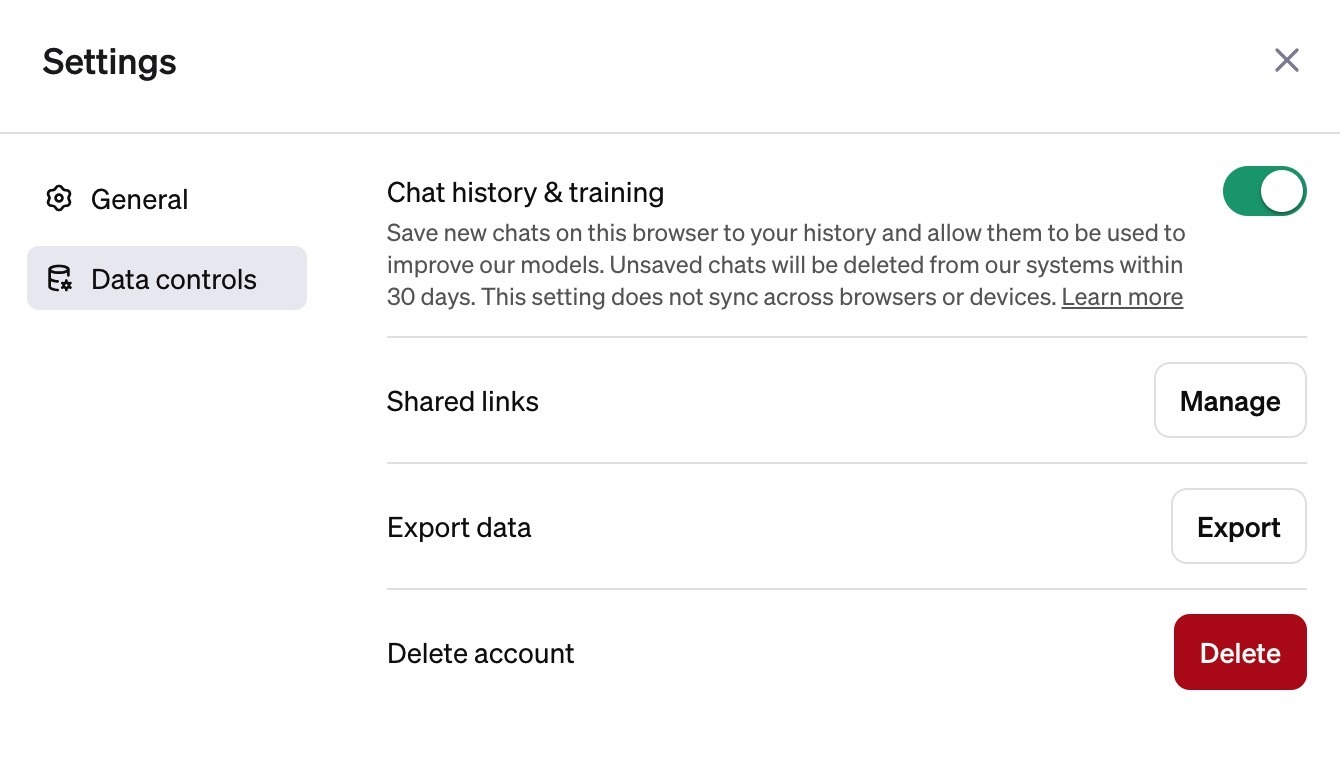

- Find the "Data Controls" or "Privacy" Settings. In your chatbot's interface (whether it's ChatGPT, Gemini, or Claude), look for the settings menu. Inside, you will find options for managing your data.

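- Turn Off Model Training on Your Conversations. This is the crucial step. The option may be labeled differently on each platform (ChatGPT, for example, has used both "Chat history & training" and "Improve the model for everyone"), and names, locations, and behavior change over time. Depending on the platform, turning it off may stop your conversations from being used to train models, stop them from being saved to your history, or both. ChatGPT, Gemini, and Claude all offer some version of this control, so check each platform's current privacy documentation to confirm exactly what it does.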

- For Company-Wide Use, Insist on an "Enterprise" Plan. If your team or company is using AI, the best practice is to use a dedicated business or enterprise plan. These subscriptions are designed for corporate use and typically come with stricter security, privacy, and data-handling terms by default; for example, business data is generally not used for model training.

Visual Aid: The Privacy Toggle

Switch this toggle to the "OFF" position. On most interfaces, a toggle that is off appears grayed out.

Quick Check

What is the single most important step you should take in your chatbot's settings before using it for any sensitive work-related brainstorming?

Recap: How to use chatbots safely with company data

What we covered:

- Using public chatbots with confidential data is risky because your conversations could be used for AI model training.

- The most critical safety step is to go into your settings and turn off model training on your conversations (and, where the platform combines the two, chat history).

- All three major platforms (ChatGPT, Gemini, Claude) provide this crucial privacy feature.

- For official company use, always opt for a secure enterprise-grade plan.

Why it matters:

- Protecting confidential information is a fundamental responsibility. Knowing how to use these tools safely allows you to leverage their power without creating security risks for you or your company.

Next up:

- We'll learn how to make chatbots even more powerful and personal by using "custom instructions."